Googlebot’s 2MB Limit: Why You Don’t Need to Worry

A quick summary:

- Some people noticed that Google's documentation said Googlebot now had a 2MB crawl limit. But...

- This is not a new development; the documentation itself was simply updated to clarify the rule.

- Most websites fall way below this threshold anyway, so you should be safe.

The Googlebot limit in a bit more detail

Just a brief one for now, lest this topic run away from us.

A few days ago, word was going around in the SEO and digital marketing community that Google - specifically its Googlebot crawler - now had a crawl limit of 2MB per file. This seems to have led some to believe there had been a sneaky update, and that this new limitation may have consequences for websites.

As noted by this LinkedIn post, as of February 5, 2026, it looked as though Google had changed its limit, dropping it from 15MB to a much more paltry-sounding 2MB. At a cursory glance, this appears to be a significant change to the way Google crawls and indexes websites.

However, there is no cause for alarm.

In its own documentation, Google itself says its crawlers only scan the "first 15MB of a file," and that "any content beyond this limit is ignored." So that limit is still in place as of this article's publication.

In addition, Googlebot (the bit that's actually used to index websites) has a smaller limit of 2MB per file, with 64MB allowed for PDF files.
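To make the distinction a little more concrete, here is a minimal sketch of how these limits could be modelled. It assumes that 1MB means 1,048,576 bytes and that anything past the limit is simply ignored, as the documentation quoted above describes. The function names and the `kind` labels are hypothetical, for illustration only; this is not Google's code.

```python
# Hypothetical model of the per-file limits described above; not Google's code.
# Assumption: 1MB is treated as 1,048,576 bytes.
MB = 1024 * 1024

# Limits in bytes, keyed by hypothetical labels.
LIMITS = {
    "general": 15 * MB,        # Google's crawlers in general: first 15MB of a file
    "googlebot": 2 * MB,       # Googlebot: first 2MB of a file
    "googlebot_pdf": 64 * MB,  # Googlebot: PDF files
}


def crawled_portion(content: bytes, kind: str) -> bytes:
    """Return only the bytes within the limit; anything beyond it is ignored."""
    return content[: LIMITS[kind]]


def is_truncated(content: bytes, kind: str) -> bool:
    """True if part of the file would fall beyond the limit."""
    return len(content) > LIMITS[kind]


if __name__ == "__main__":
    # A deliberately oversized page of just over 3MB.
    page = b"<html>" + b"x" * (3 * MB) + b"</html>"
    kept = crawled_portion(page, "googlebot")
    print(len(kept), is_truncated(page, "googlebot"))
```

The same oversized page would fit comfortably under the general 15MB limit, which is exactly why the two figures caused some confusion.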

What seems to have happened is that Google simply updated its changelog to clarify certain things, making the rules about what's crawled more specific, as noted by Search Engine Journal.

Why you don't need to worry

So, again, there is no cause for alarm. However, if you are concerned that 2MB doesn't leave much wiggle room for your website, especially if it's quite sizeable, let me offer some reassurance.

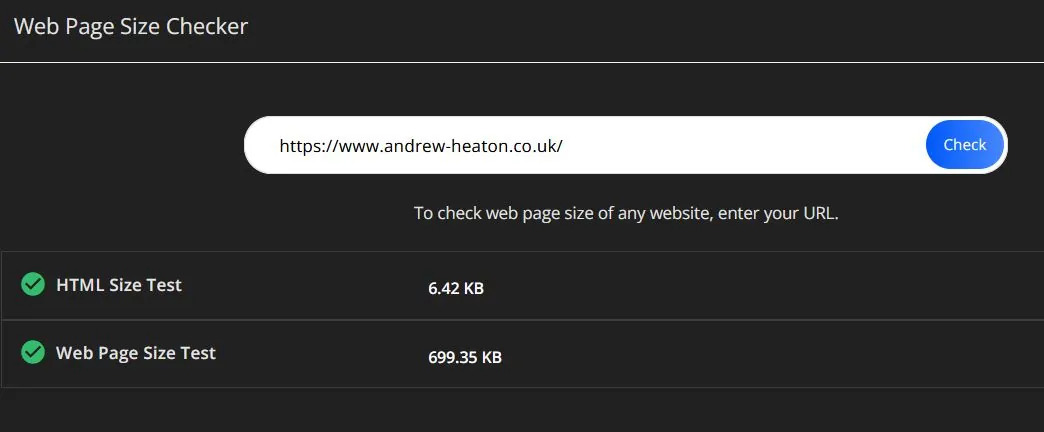

Take a look at the screenshot below:

In total - including all images and text - my website clocks in at just under 700 KB. Now, as I'm hardly taking up a ton of server real estate on Journo Portfolio, it's tempting to say that I'm an outlier.

But what if your website has tons of images and videos, a huge database, JavaScript applets, or dozens of product pages?

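Well, you should still be safe. The limit applies per file, so externally linked images, videos, and scripts are fetched as separate files rather than counting toward your page's HTML.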

A more recent article on Search Engine Journal (I am all about the linkages today) says that the global median size of a web page's "raw HTML" is around 33 kilobytes.

Now, metrics may vary, and other research could produce different numbers depending on how it's measured. Still, at around 33KB, a median page uses less than 2% of that 2MB allowance, so unless we're running something as huge as Amazon, the vast majority of us will probably never need to concern ourselves with Googlebot's 2MB crawl limit.

Of course, that doesn't mean you can't be a little bit cautious. There's absolutely no harm in having a look at your own website to see whether you can do a bit of "deadheading." Any reduction in file size will only help in the long term.
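If you'd like to check one of your own pages, here is a minimal sketch in Python. It downloads a page's raw HTML with the standard library's `urllib.request` and reports its size against a 2MB threshold. A few assumptions: 2MB is treated as 2,097,152 bytes, the script name and `User-Agent` string are hypothetical placeholders, and the HTML your server returns to this script may differ from what Googlebot receives (for example, if responses vary by user agent).

```python
import sys
import urllib.request

# Assumption: 2MB treated as 2 * 1024 * 1024 bytes.
GOOGLEBOT_LIMIT_BYTES = 2 * 1024 * 1024


def html_size_report(html: bytes, limit: int = GOOGLEBOT_LIMIT_BYTES) -> str:
    """Describe how much of the limit a page's raw HTML uses."""
    size = len(html)
    share = size / limit * 100
    status = "over" if size > limit else "within"
    return f"{size:,} bytes ({share:.1f}% of limit), {status} the limit"


def fetch_raw_html(url: str, timeout: float = 10.0) -> bytes:
    """Download the raw HTML only; linked images, scripts and CSS are not fetched."""
    # Hypothetical User-Agent string, used only to identify this script.
    request = urllib.request.Request(url, headers={"User-Agent": "size-check/0.1"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()


if __name__ == "__main__":
    if len(sys.argv) > 1:
        print(html_size_report(fetch_raw_html(sys.argv[1])))
    else:
        print("usage: python check_size.py https://example.com/")
```

For a page at the roughly 33KB median mentioned above (taking 33KB as 33,792 bytes), the report reads `33,792 bytes (1.6% of limit), within the limit`. Because only the HTML document is downloaded, this measures the same "raw HTML" figure rather than the full page weight shown in the screenshot.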

If you would like me to have a look at your website to see if there are ways to optimise it for performance, I offer SEO audits and other services. Do get in touch.
